BIT Baijia Lecture Series ONLINE

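Standards Group of the Chinese Academy of Sciences Artificial Intelligence Alliance

Graduate School, Beijing Institute of Technology

School of Computer Science, Beijing Institute of Technology

Jointly hosted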

January Highlights

January 7

15:00-17:00

The BIT Baijia Lecture Series once again brings you an academic feast!

Trustworthy Artificial Intelligence Lecture Series

Lecture 7

Group-Theoretic Self-Supervised Representation Learning

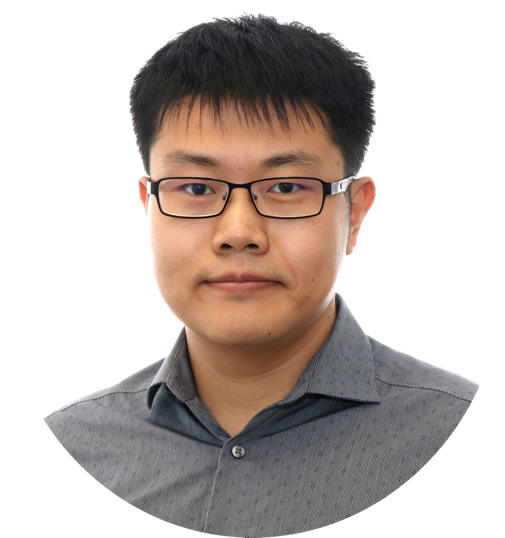

Hanwang Zhang

Nanyang Technological University, School of Computer Science and Engineering

Hanwang Zhang is an Assistant Professor at Nanyang Technological University's School of Computer Science and Engineering. His research interests include Computer Vision, Natural Language Processing, Causal Inference, and their combinations. His work has received numerous awards, including the IEEE AI's 10 to Watch 2020, TMM Prize Paper Award 2020, Alibaba Innovative Research Award 2019, ACM ToMM Best Paper Award 2018, Nanyang Assistant Professorship 2018, ACM SIGIR Best Paper Honourable Mention Award 2016, and ACM MM Best Student Paper Award 2012. Hanwang and his team work actively on causal inference for connecting vision and language. For example, their scene graph detection benchmark was an IEEE CVPR Best Paper Finalist in 2019, and their visual dialog agent won 1st place in the Visual Dialog Challenge 2019 and 2nd place in 2018 and 2020.

ABSTRACT

A good visual representation is an inference map from observations (images) to features (vectors) that faithfully reflects the structure and transformations of the underlying generative factors (semantics), which are invariant to environmental changes. In this paper, we formulate the notion of a "good" representation from a group-theoretic view using Higgins' definition of disentangled representation, and show that existing Self-Supervised Learning (SSL) can only learn augmentation-related features such as lighting and view shifts, leaving the rest of the high-level semantics entangled. To break this limitation, we propose an iterative SSL method, Iterative Partition-based Invariant Risk Minimization (IP-IRM), which grounds the abstract group actions in a concrete SSL optimization. At each iteration, IP-IRM first partitions the training samples into subsets, where the partition reflects an entangled semantic group action. Then, it leverages IRM to learn subset-invariant sample similarities, where the invariance is guaranteed to disentangle the corresponding semantic. We prove that IP-IRM converges to a full-semantic disentangled representation, and show its effectiveness on various feature disentanglement and SSL benchmarks.

January 7 (Friday)

15:00-17:00

How to Join

Tencent Meeting

Meeting topic:

CASAI Scientific Frontiers Lecture Series

Join via Tencent Meeting:

Meeting ID: 873-681-984
